Projects

Tools and Approaches that Span across Projects

Uncovering the brain’s indexing system

Several previous studies have revealed the neurosemantic dimensions that underlie particular semantic domains. For example, the neural representations of emotions are organized around three factors: valence, intensity, and sociality (Kassam et al., 2013). The table below additionally describes the dimensions that underlie the domains of elementary physics concepts, abstract concepts, concrete object concepts, and concepts involved in simple narrative sentences.

The main indicator of what a factor codes is the ordering of the items by their factor scores. For example, the emotion valence factor would presumably assign a large score to fury and a small score to calmness. A second indicator of what a factor codes is the location of the voxels that are strongly associated with (load heavily on) the factor. For example, the voxels that load heavily on the body-object interaction factor are located in motor and premotor brain regions, which code the body’s motor configuration or plan for interacting with a particular object.
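The two indicators described above can be sketched in a few lines of Python. This is only an illustration: the factor scores, voxel loadings, region labels, and the 0.5 threshold are all invented for the example and are not values from any of the cited studies, and the helper names `rank_items` and `heavy_voxels` are hypothetical.

```python
# Illustrative sketch only: every number below is invented, not study data.

def rank_items(scores):
    """Return item names ordered from highest to lowest factor score."""
    return sorted(scores, key=scores.get, reverse=True)

def heavy_voxels(loadings, threshold):
    """Return the voxels whose absolute loading meets the (assumed) threshold."""
    return [voxel for voxel, weight in loadings.items() if abs(weight) >= threshold]

# Hypothetical scores of four items on a body-object interaction factor.
item_scores = {"hammer": 1.6, "pedal": 1.1, "apple": 0.2, "tent": -0.7}

# Hypothetical loadings, keyed by (region label, voxel id).
voxel_loadings = {
    ("premotor", 0): 0.81,
    ("motor", 1): 0.74,
    ("occipital", 2): 0.12,
    ("temporal", 3): -0.05,
}

# Indicator 1: the ordering of the items by their factor scores.
ranked = rank_items(item_scores)

# Indicator 2: where the heavily loading voxels are located.
heavy = heavy_voxels(voxel_loadings, threshold=0.5)
regions = sorted({region for region, _ in heavy})

print("items by factor score:", ranked)
print("regions with heavy loadings:", regions)
```

In practice the scores and loadings would come from a factor analysis of the activation data; the interpretation step itself is just this kind of sorting and thresholding.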

All of the factors uncovered so far reflect aspects of the world that are useful to conceptualize, and they also reflect plausible neural processing capabilities. Ongoing studies are investigating the neural representations of large numbers of concepts in several additional domains of knowledge, greatly increasing the coverage of the neurosemantic space.

| Domain | Neurosemantic Dimensions | | |
| --- | --- | --- | --- |
| Emotions (Kassam et al., 2013) | Valence | Intensity | Sociality |
| Example items | happy vs. sad | fury vs. annoyance | envy vs. sadness |
| Concrete objects (Just et al., 2010) | Body-Object Interaction | Eating/Drinking | Shelter/Enclosure |
| Example items | hammer vs. pedal | milk, apple | tent, apartment |
| Narrative sentences (Wang et al., 2017) | People, Social Interactions | Spatial Settings | Actions, Feelings |
| Example items | person, violence | places, buildings | emotions, arousal |
| Physics concepts (Mason & Just, 2016) | Energy Flow | Motion Visualization | Periodicity/Oscillation |
| Example items | direct current | torque | frequency |
| Abstract concepts (Vargas & Just, 2019) | Verbal Representation | Externality/Internality | Social Content |
| Example items | compliment | spirituality | gossip |

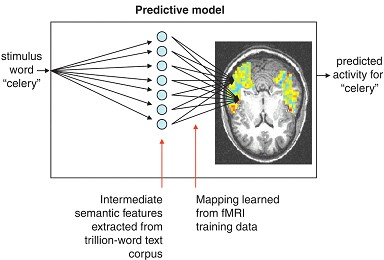

Predictive modeling. Our approach applies machine learning algorithms to fMRI data to identify the neural patterns associated with individual concepts. The program learns a representation from a subset of our fMRI data and can then identify a brain image it has not yet seen. We have also developed a computational model that predicts the fMRI activation for words for which fMRI data are not yet available. By leaving out different subsets of images to test the program, we obtain a measure of how well our algorithms generalize to new data, that is, to an independent subset of the same participant's data. The predictions consistently achieve a high level of accuracy, and they generalize in interesting ways: we obtain successful predictions when training on one language and testing on another in bilingual participants, when training on one modality to predict another (words and pictures), and when training on one study to predict another study with different stimuli.
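The leave-out testing procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the lab's actual pipeline: the "activation patterns" are synthetic random vectors, the classifier is a simple nearest-centroid rule using Pearson correlation (a stand-in chosen for brevity), and all function names and parameter values are hypothetical.

```python
import random
from statistics import mean

def pearson(a, b):
    """Pearson correlation between two equal-length vectors."""
    ma, mb = mean(a), mean(b)
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = (sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b)) ** 0.5
    return num / den if den else 0.0

def make_synthetic_data(concepts, n_presentations, n_voxels, noise, seed):
    """Synthetic stand-in for fMRI data: one noisy pattern per concept per presentation."""
    rng = random.Random(seed)
    prototypes = {c: [rng.gauss(0, 1) for _ in range(n_voxels)] for c in concepts}
    data = []
    for p in range(n_presentations):
        for c in concepts:
            pattern = [v + rng.gauss(0, noise) for v in prototypes[c]]
            data.append((p, c, pattern))
    return data

def leave_one_presentation_out_accuracy(data):
    """Train on all presentations but one, test on the held-out one, and repeat."""
    presentations = sorted({p for p, _, _ in data})
    correct = total = 0
    for held_out in presentations:
        train = [(c, x) for p, c, x in data if p != held_out]
        test = [(c, x) for p, c, x in data if p == held_out]
        # Learn one mean pattern (centroid) per concept from the training images only.
        centroids = {}
        for concept in {c for c, _ in train}:
            rows = [x for c, x in train if c == concept]
            centroids[concept] = [mean(col) for col in zip(*rows)]
        # Identify each unseen image as the concept whose centroid it correlates with most.
        for true_concept, x in test:
            predicted = max(centroids, key=lambda c: pearson(x, centroids[c]))
            correct += predicted == true_concept
            total += 1
    return correct / total

concepts = ["hammer", "apple", "tent", "gravity"]
data = make_synthetic_data(concepts, n_presentations=6, n_voxels=50, noise=0.5, seed=0)
accuracy = leave_one_presentation_out_accuracy(data)
print(f"cross-validated accuracy: {accuracy:.2f} (chance = {1 / len(concepts):.2f})")
```

Because every test image is excluded from the data used to compute the centroids, the resulting accuracy estimates how well the learned patterns generalize to an independent subset of the data rather than how well they fit the training images.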

This stylized depiction of the neural representation of the concept of GRAVITY is going to be included in the first museum on the moon. Carnegie Mellon’s Robotics Institute will send a rover to the moon, where it will remain indefinitely, housing the MoonArk, a non-encyclopedic view of humanity and life on Earth that is intended to be a Cultural Heritage Site. The MoonArk contains elements representing all the Arts and Humanities. The CCBI was invited to contribute a brain activation pattern corresponding to a moon-relevant concept.

To provide permanence, the brain activation pattern was etched in platinum on sapphire disks using an industrial 3-D printing process and included in the capsule for visitors to the moon to appreciate in the indefinite future.

The five images, from left to right, depict activation as gold highlights (left-hemisphere dominant) from the following perspectives: left sagittal, frontal coronal, right sagittal, posterior coronal, and superior axial.